This is a slight adaptation of a file I did for Emily (https://www.researchgate.net/profile/Emily_Blackshaw2) back in October 2020 when she and wanted to look at whether Cronbach’s alpha for the YP-CORE varied from session to session across help-seeking clients data: a very basic exploration of longitudinal measurement invariance. I realised it was a good chance for me to pull together what I had been learning back then about piping and to share it with her.

As a page here it probably should have come before https://www.psyctc.org/Rblog/posts/2021-02-07-why-pipe-why-the-tidyverse/, or been woven into that, but I had managed to lose the file (!). However, I think it complements what I put in there and it does introduce the rowwise() function and c_across().

Show code

### this is just the code that creates the "copy to clipboard" function in the code blocks

htmltools::tagList(

xaringanExtra::use_clipboard(

button_text = "<i class=\"fa fa-clone fa-2x\" style=\"color: #301e64\"></i>",

success_text = "<i class=\"fa fa-check fa-2x\" style=\"color: #90BE6D\"></i>",

error_text = "<i class=\"fa fa-times fa-2x\" style=\"color: #F94144\"></i>"

),

rmarkdown::html_dependency_font_awesome()

)As is my wont, I prefer to explore methods with simulated data so the first step was to make such data. Here I am simulating 500 clients each having ten sessions and just a five item questionnaire (the YP-CORE has ten items but five is quicker and fits output more easily!)

Show code

### make some nonsense data

library(tidyverse)

nParticipants <- 500

nSessions <- 10

### give myself something to start with: the sessions

session <- rep(1:nSessions, nParticipants) # 1,2,3 ...10, 1,23 ...10 ...

session %>%

as_tibble() %>% # turn from vector to tibble, that means I have rename it back to the vector name!

rename(session = value) %>%

mutate(baseVar = rnorm(nParticipants*nSessions), # this creates a new variable in the tibble and sort of reminds me that variables may be vectors

item1 = baseVar + 0.7*rnorm(nParticipants*nSessions), # creates a first item

item2 = baseVar + 0.7*rnorm(nParticipants*nSessions), # and a second

item3 = baseVar + 0.7*rnorm(nParticipants*nSessions), # and a third

item4 = baseVar + 0.7*rnorm(nParticipants*nSessions), # and a 4th ...

item5 = baseVar + 0.7*rnorm(nParticipants*nSessions)) -> tmpDat

### look at it

tmpDat# A tibble: 5,000 × 7

session baseVar item1 item2 item3 item4 item5

<int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1 -1.09 -1.54 -0.487 -2.37 -2.07 -1.23

2 2 -1.13 -1.06 -1.53 -0.369 -1.02 -1.57

3 3 0.0984 -0.473 1.13 -0.752 -0.617 0.427

4 4 -0.933 -0.426 -1.30 -0.354 -1.41 -1.56

5 5 -0.957 -0.734 -0.692 0.417 -2.21 -1.08

6 6 0.507 1.27 0.247 -0.124 0.493 0.654

7 7 -1.40 -1.58 -1.22 -1.71 -1.92 -1.42

8 8 -1.26 -1.19 -1.42 -0.689 -1.03 -0.623

9 9 -0.914 -2.33 -0.579 -1.35 -1.01 -0.917

10 10 -0.397 0.127 -0.471 -1.21 -1.11 0.0150

# ℹ 4,990 more rowsShow code

### check the simple correlation

cor(tmpDat[, 3:7]) item1 item2 item3 item4 item5

item1 1.0000000 0.6591352 0.6620830 0.6644586 0.6553199

item2 0.6591352 1.0000000 0.6707748 0.6674330 0.6641176

item3 0.6620830 0.6707748 1.0000000 0.6696733 0.6532232

item4 0.6644586 0.6674330 0.6696733 1.0000000 0.6562823

item5 0.6553199 0.6641176 0.6532232 0.6562823 1.0000000Show code

### OK, I can play with that, here's the overall alpha (meaningless even for the simulation really but just checking)

psychometric::alpha(tmpDat[, 3:7])[1] 0.9074184OK. Now I could start playing with the data in the tidyverse/dplyr/piping way. The key thing to remember is that the default behaviour of mutate() or summarise() within group_by() in dplyr is for a function to act on a vertical vector, i.e. on a variable

# A tibble: 10 × 2

session mean1

<int> <dbl>

1 1 0.0506

2 2 -0.0308

3 3 0.0654

4 4 0.0302

5 5 0.0654

6 6 -0.0260

7 7 0.0841

8 8 0.0172

9 9 -0.00995

10 10 0.0374 So that simply got us the mean for item1 across all completions but broken down by session. Trivial dplyr/piping but I still find it satisfying in syntax and in its utility.

As introduced in https://www.psyctc.org/Rblog/posts/2021-02-07-why-pipe-why-the-tidyverse/, if I have a function that returns more than one value dplyr handles this nicely but I have to tell it the function is creating a list (even if it’s just a vector), as below. The catch to remember is that you then have to unnest() the list to see its values, usually unnest_wider() is what I want but there is unnest_longer().

Show code

# A tibble: 10 × 7

session Min. `1st Qu.` Median Mean `3rd Qu.` Max.

<int> <table[1d]> <table[1d]> <table[1d]> <tab> <table[1> <tab>

1 1 -3.353517 -0.8363637 9.082438e-02 0.0… 0.8494070 3.26…

2 2 -3.436229 -0.8174509 -4.555271e-02 -0.0… 0.7188596 3.95…

3 3 -3.348931 -0.8026705 4.833607e-02 0.0… 0.8942703 3.70…

4 4 -4.154733 -0.7494581 -7.354608e-05 0.0… 0.9130740 3.55…

5 5 -3.538548 -0.7582946 4.144949e-02 0.0… 0.9318839 3.56…

6 6 -3.784561 -0.8802649 -9.139588e-02 -0.0… 0.8072120 3.33…

7 7 -3.943631 -0.7611005 3.655201e-02 0.0… 0.9274534 3.26…

8 8 -3.973647 -0.8250926 1.890075e-02 0.0… 0.8383157 3.07…

9 9 -3.822690 -0.8469892 -1.396183e-02 -0.0… 0.8386874 3.69…

10 10 -3.746684 -0.8208357 1.702821e-02 0.0… 0.8848634 3.88…Show code

### names are messy but it is easy to solve that ...

tmpDat %>%

group_by(session) %>%

summarise(summary1 = list(summary(item1))) %>%

unnest_wider(summary1) %>%

### sometimes you have to clean up names that start

### with numbers or include spaces if you want to avoid backtick quoting

rename(Q1 = `1st Qu.`,

Q3 = `3rd Qu.`)# A tibble: 10 × 7

session Min. Q1 Median Mean Q3 Max.

<int> <table[1d]> <table[1d]> <table[1d]> <table[1> <tab> <tab>

1 1 -3.353517 -0.8363637 9.082438e-02 0.05058… 0.84… 3.26…

2 2 -3.436229 -0.8174509 -4.555271e-02 -0.03081… 0.71… 3.95…

3 3 -3.348931 -0.8026705 4.833607e-02 0.06544… 0.89… 3.70…

4 4 -4.154733 -0.7494581 -7.354608e-05 0.03017… 0.91… 3.55…

5 5 -3.538548 -0.7582946 4.144949e-02 0.06537… 0.93… 3.56…

6 6 -3.784561 -0.8802649 -9.139588e-02 -0.02595… 0.80… 3.33…

7 7 -3.943631 -0.7611005 3.655201e-02 0.08406… 0.92… 3.26…

8 8 -3.973647 -0.8250926 1.890075e-02 0.01721… 0.83… 3.07…

9 9 -3.822690 -0.8469892 -1.396183e-02 -0.00994… 0.83… 3.69…

10 10 -3.746684 -0.8208357 1.702821e-02 0.03740… 0.88… 3.88…Again, as I introduced in https://www.psyctc.org/Rblog/posts/2021-02-07-why-pipe-why-the-tidyverse/, I can extend this to handle more than one vector/variable at a time if they’re similar and I’m doing the same to each.

# A tibble: 10 × 6

session item1 item2 item3 item4 item5

<int> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1 0.0506 0.0243 -0.0401 0.00924 0.0664

2 2 -0.0308 -0.00961 -0.0478 -0.0337 -0.00562

3 3 0.0654 0.0956 0.131 0.0698 0.117

4 4 0.0302 0.00609 0.0183 0.0554 -0.00705

5 5 0.0654 0.0467 0.0971 0.121 0.0412

6 6 -0.0260 -0.0173 -0.0375 -0.00194 -0.0291

7 7 0.0841 0.0274 -0.0109 -0.0346 0.0288

8 8 0.0172 -0.0477 -0.0143 -0.00567 -0.0279

9 9 -0.00995 -0.0105 0.0847 0.0298 0.00826

10 10 0.0374 0.000706 -0.0347 -0.0258 0.0136 I can also do that with the following syntax. I have not yet really understood why the help for across() gives that one with function syntax (“~”) and the explicit call of “.x) rather than this and I really ought to get my head around the pros and cons of each.

# A tibble: 10 × 6

session item1 item2 item3 item4 item5

<int> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1 0.0506 0.0243 -0.0401 0.00924 0.0664

2 2 -0.0308 -0.00961 -0.0478 -0.0337 -0.00562

3 3 0.0654 0.0956 0.131 0.0698 0.117

4 4 0.0302 0.00609 0.0183 0.0554 -0.00705

5 5 0.0654 0.0467 0.0971 0.121 0.0412

6 6 -0.0260 -0.0173 -0.0375 -0.00194 -0.0291

7 7 0.0841 0.0274 -0.0109 -0.0346 0.0288

8 8 0.0172 -0.0477 -0.0143 -0.00567 -0.0279

9 9 -0.00995 -0.0105 0.0847 0.0298 0.00826

10 10 0.0374 0.000706 -0.0347 -0.0258 0.0136 Again, as I introduced in https://www.psyctc.org/Rblog/posts/2021-02-07-why-pipe-why-the-tidyverse/, I can do multiple functions of the same items

Show code

# A tibble: 10 × 11

session item1_mean item1_sd item2_mean item2_sd item3_mean item3_sd

<int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1 0.0506 1.18 0.0243 1.19 -0.0401 1.19

2 2 -0.0308 1.14 -0.00961 1.20 -0.0478 1.21

3 3 0.0654 1.23 0.0956 1.21 0.131 1.22

4 4 0.0302 1.26 0.00609 1.17 0.0183 1.23

5 5 0.0654 1.26 0.0467 1.17 0.0971 1.19

6 6 -0.0260 1.20 -0.0173 1.22 -0.0375 1.23

7 7 0.0841 1.19 0.0274 1.16 -0.0109 1.20

8 8 0.0172 1.21 -0.0477 1.20 -0.0143 1.24

9 9 -0.00995 1.20 -0.0105 1.21 0.0847 1.19

10 10 0.0374 1.25 0.000706 1.19 -0.0347 1.23

# ℹ 4 more variables: item4_mean <dbl>, item4_sd <dbl>,

# item5_mean <dbl>, item5_sd <dbl>I like that that names things sensibly

I said the default behaviour of mutate() and summarise() is to work on variables, i.e. vectors, whether that is to work on all the values of the variable if there is no group_by(), or within the groups if there is a grouping. If I want to do something on individual values, i.e. by rows, “rowwise”, then I have to use rowwise() which basically treats each row as a group.

If, as you often will in that situation, you want to use a function of more than one value, i.e. values from more than one variable, then you have to remember to use c_across() now, not across(): “c_” as it’s by column.

You also have to remember to ungroup() after any mutate() as you probably don’t want future functions to handle things one row at a time.

Show code

# A tibble: 5 × 8

session baseVar item1 item2 item3 item4 item5 mean

<int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1 -1.09 -1.54 -0.487 -2.37 -2.07 -1.23 -1.54

2 2 -1.13 -1.06 -1.53 -0.369 -1.02 -1.57 -1.11

3 3 0.0984 -0.473 1.13 -0.752 -0.617 0.427 -0.0577

4 4 -0.933 -0.426 -1.30 -0.354 -1.41 -1.56 -1.01

5 5 -0.957 -0.734 -0.692 0.417 -2.21 -1.08 -0.860 OK, so that’s recapped these things, now what about if I want to look at multiple columns and multiple rows?

the trick seems to be cur_data().

That gives me a sensible digression from Cronbach’s alpha here as I often find I’m wanting to get correlation matrices when I’m wanting to get alpha (and its CI) and I think getting correlation matrices from grouped data ought to be much easier than it is!

Show code

# A tibble: 1 × 5

item1[,"item1"] item2[,"item1"] item3[,"item1"] item4[,"item1"]

<dbl> <dbl> <dbl> <dbl>

1 1 0.659 0.662 0.664

# ℹ 5 more variables: item1[2:5] <dbl>, item2[2:5] <dbl>,

# item3[2:5] <dbl>, item4[2:5] <dbl>, item5 <dbl[,5]>That, as you can see, is a right old mess!

but we can use correlate() from the corrr package:

# A tibble: 5 × 6

term item1 item2 item3 item4 item5

<chr> <dbl> <dbl> <dbl> <dbl> <dbl>

1 item1 NA 0.659 0.662 0.664 0.655

2 item2 0.659 NA 0.671 0.667 0.664

3 item3 0.662 0.671 NA 0.670 0.653

4 item4 0.664 0.667 0.670 NA 0.656

5 item5 0.655 0.664 0.653 0.656 NA As you see, corrr::correlate() puts NA in the leading diagonal not 1.0. That does make finding the maximum off diagonal correlations easy but I confess it seems wrong to me!

What about using that and group_by()?

# A tibble: 6 × 7

term session item1 item2 item3 item4 item5

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 session NA -0.00206 -0.0137 0.000411 -0.00927 -0.0157

2 item1 -0.00206 NA 0.659 0.662 0.664 0.655

3 item2 -0.0137 0.659 NA 0.671 0.667 0.664

4 item3 0.000411 0.662 0.671 NA 0.670 0.653

5 item4 -0.00927 0.664 0.667 0.670 NA 0.656

6 item5 -0.0157 0.655 0.664 0.653 0.656 NA Hm, that completely ignores the group_by() and includes session variable. That seems plain wrong to me. I feel sure this is something the package will eventually change but for now I need another way to get what I want.

I have not evaluated that as it stops with the moderately cryptic error message which I’m putting in here as I quite often forget the summarise(x = ) bit

# Error: `cur_data()` must only be used inside dplyr verbs.

# Run `rlang::last_error()` to see where the error occurred.So let’s fix that.

Show code

# A tibble: 50 × 2

# Groups: session [10]

session cor$term $item1 $item2 $item3 $item4 $item5

<int> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1 item1 NA 0.678 0.632 0.673 0.659

2 1 item2 0.678 NA 0.631 0.656 0.634

3 1 item3 0.632 0.631 NA 0.649 0.611

4 1 item4 0.673 0.656 0.649 NA 0.650

5 1 item5 0.659 0.634 0.611 0.650 NA

6 2 item1 NA 0.657 0.654 0.683 0.624

7 2 item2 0.657 NA 0.653 0.658 0.644

8 2 item3 0.654 0.653 NA 0.670 0.671

9 2 item4 0.683 0.658 0.670 NA 0.644

10 2 item5 0.624 0.644 0.671 0.644 NA

# ℹ 40 more rowsHm. That does get me the analyses I want but in what is, to my mind, a very odd structure.

OK, after that digression into the corrr package, let’s get to what Emily actually wanted: Cronbach’s alpha across the items but per session.

Show code

# A tibble: 10 × 2

session alpha

<int> <dbl>

1 1 0.902

2 2 0.905

3 3 0.915

4 4 0.910

5 5 0.901

6 6 0.908

7 7 0.912

8 8 0.907

9 9 0.906

10 10 0.908I get my CI around alpha using the following code.

Show code

psychometric::alpha(tmpDat[, 3:7])[1] 0.9074184Show code

[1] 0.9074184Show code

bootReps <- 1000

getCIAlphaDF3 <- function(dat, ciInt = .95, bootReps = 1000) {

tmpRes <- boot::boot(na.omit(dat), getAlphaForBoot, R = bootReps)

tmpCI <- boot::boot.ci(tmpRes, conf = ciInt, type = "perc")$percent[4:5]

return(data.frame(alpha = tmpRes$t0,

LCL = tmpCI[1],

UCL = tmpCI[2]))

}

getCIAlphaDF3(tmpDat[, 3:7]) alpha LCL UCL

1 0.9074184 0.903393 0.9113645Actually, now I have my CECPfuns package I create a better, more robust function for this, but later!

So that’s the overall Cronbach alpha with bootstrap confidence interval.

Can also do that within a group_by() grouping.

Show code

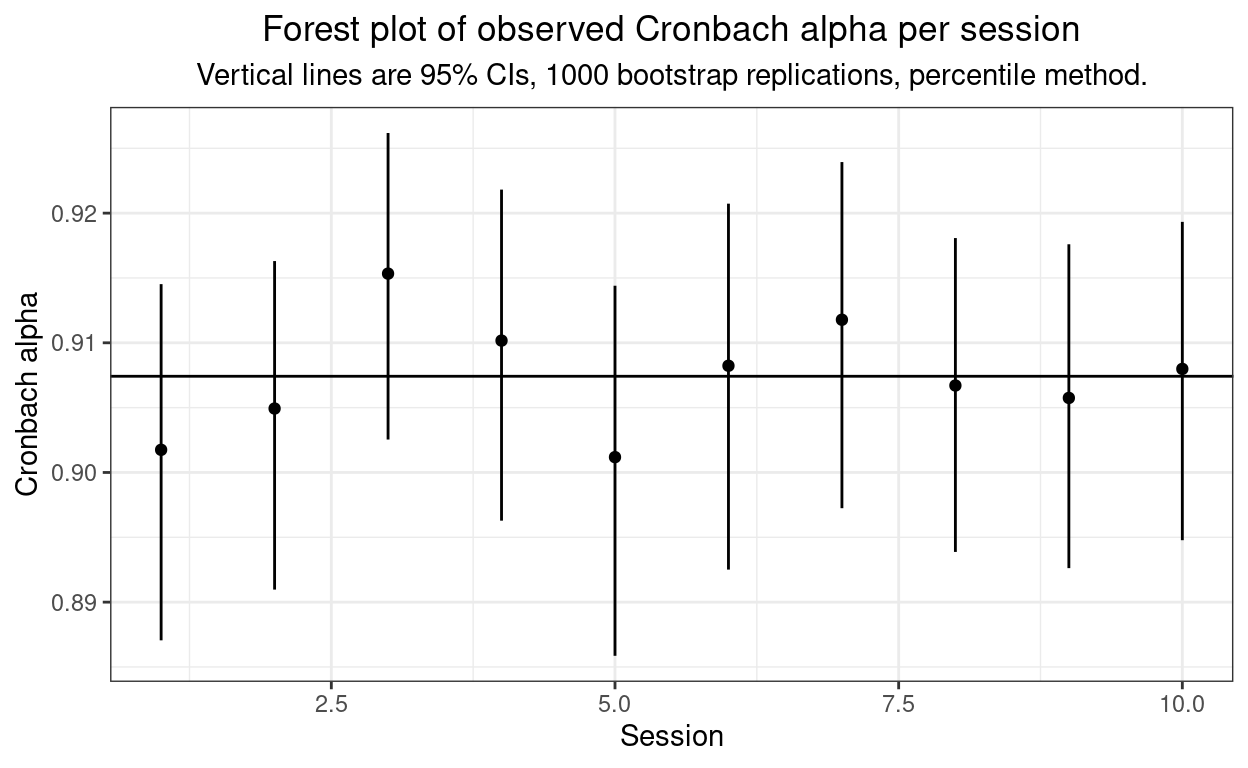

# A tibble: 10 × 4

session alpha LCL UCL

<int> <dbl> <dbl> <dbl>

1 1 0.902 0.887 0.913

2 2 0.905 0.891 0.917

3 3 0.915 0.902 0.927

4 4 0.910 0.896 0.922

5 5 0.901 0.885 0.914

6 6 0.908 0.894 0.920

7 7 0.912 0.897 0.923

8 8 0.907 0.894 0.917

9 9 0.906 0.893 0.917

10 10 0.908 0.895 0.918And that was nice and easy to feed into a forest style plot, as follows.

Show code

tmpDat %>%

select(-baseVar) %>%

group_by(session) %>%

summarise(alpha = list(getCIAlphaDF3(cur_data()))) %>%

unnest_wider(alpha) -> tmpTib

psychometric::alpha(tmpDat[, 3:7]) -> tmpAlphaAll

ggplot(data = tmpTib,

aes(x = session, y = alpha)) +

geom_point() + # get the observed alphas in as points

geom_linerange(aes(ymin = LCL, ymax = UCL)) + # add the CIs as lines

geom_hline(yintercept = tmpAlphaAll) + # not really very meaningful to have an overall alpha but

# perhaps better than not having a reference line

xlab("Session") +

ylab("Cronbach alpha") +

ggtitle("Forest plot of observed Cronbach alpha per session",

subtitle = paste0("Vertical lines are 95% CIs, ",

bootReps,

" bootstrap replications, percentile method.")) +

theme_bw() + # nice clean theme

theme(plot.title = element_text(hjust = .5), # centre the title

plot.subtitle = element_text(hjust = .5)) # and subtitle

Well, as you’d expect from the simulation method, no evidence of heterogeneity of Cronbach’s alpha across sessions!

I hope this is a useful further introduction to piping, dplyr and some of the tidyverse approach. I guess it introduced the corrr package, cur_data() and rowwise() … and it finished with a, for me, typical use of ggplot() (from the ggplot2 package.)

Do contact me if you have any comments, suggestions, corrections, improvements … anything!

page counter