This post is jumping in front of one, perhaps several, about my, our, work trip to Latin America earlier this year.

This one arose because I met with some friends a couple of weeks ago who asked, as I am sure they have before, “What do you do?” Sadly, again as I am sure I did before, I completely failed to give a sensible, succinct answer. I think the follow-up question was “Is it all about CORE?” to which I said something like “Some of it, still rather more than I want, I guess” but again I dodged.

This won’t do! What do I do?! Can I for once find a fairly short, comprehensible answer?

No, I’m not good at short and comprehensible for things I care about deeply. Hence this isn’t all that short but it’s not War and Peace. Stay with me please!

My friends are also ex-colleagues, but clinical ex-colleagues from when I was working as a therapist, from my best period of clinical work. That was in Nottingham from around 2007 to 2014. They know that I stopped clinical work back in 2016, that I have an NHS pension and that I don’t work for pay now. Finally, they know that I still work well over a 40-hour week. Before we come to “what” I do, let’s look at “why”. What motivates me?

Since 1995 I have said that my interest has been:

“How do we know, or think we know, what we think we do?”

and that’s been true ever since. Technically I think I am a methodologist but that’s a bit of a technical term and fails to convey the passion I feel about this!

However, it is an accurate label: I work on the methods we use to try to understand more about humans and particularly the methods we use to try to learn more about psychosocial interventions for human distress and dysfunction.

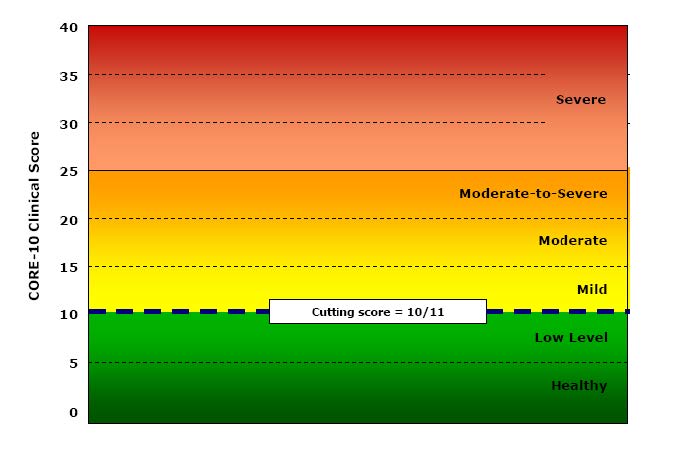

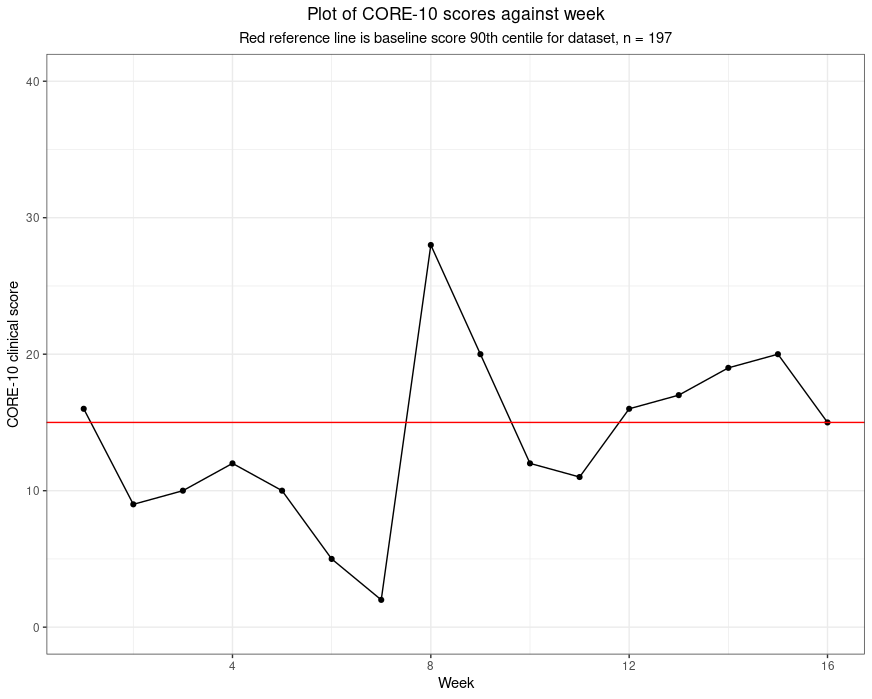

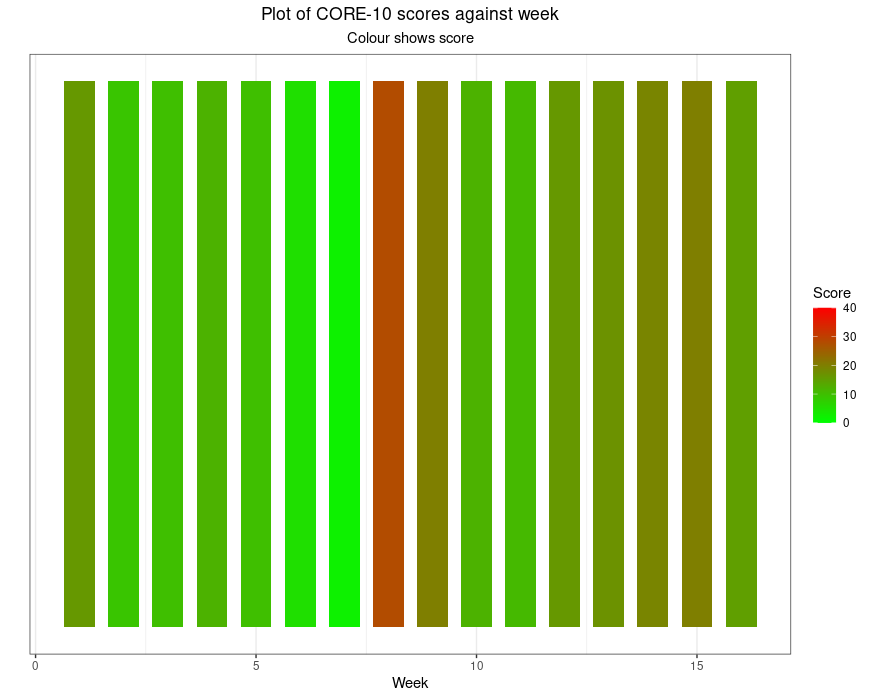

I am particularly interested in “psychometrics”, another technical and dry-sounding term. So, coming to what I do: I analyse data, mostly but not all from questionnaires. However, I believe strongly that many of the methods we use to analyse such data are widely misused or oversold. So as well as using these methods I also work on our understanding of the methods themselves.

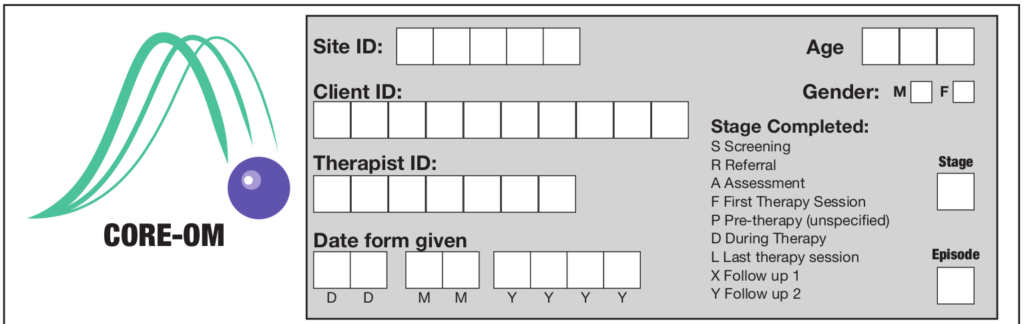

Much of what I do in this area is about the CORE system. That is logical as I was a co-creator of the system and am now the main maintainer of its web presence (here). I do also continue to do a very little work around some other measures: the MIS and CAM that I co-developed, the BSQ for which I developed short forms, and I watch what goes on with the SCORE, a measure of family state that again I had a hand in developing. Moving away from more conventional questionnaires, I also work a bit with data from PSYCHLOPS, which I helped with in its early stages (but didn’t design), and with data from repertory grids and other idiographic (i.e. purely personal) data collection tools (see the rigorous idiography bit of this site).

But back to this:

“How do we know, or think we know, what we think we do?”

Writing this I realise that I am always trying both to distil out useful findings from such data and to improve our data analytic methods. I do want to have my cake and eat it!

Writing this I also realise that there has always been another thread: I want the work to be politically aware. That has changed and developed over the 40+ years I’ve been doing this. At first a critical political concern was there, but I was mostly seduced by the excitements of getting data, of sometimes having clear findings, and of learning about the increasing diversity and sophistication of the analytic methods. There was too much methodolatry in my work. I saw the methods, particularly statistical, quantitative methods, as much more capable of telling us what we should think than they actually are, and that stopped me really thinking critically both about the methods and about how they are embedded in power structures, economic differentials, inequities and politics.

How did I get here: personal background

I am the child of a language teacher and a history teacher. The history teacher, my father, moved fairly early in his career from direct teaching to teaching teachers. I think four things came from that heritage.

- A reverence for knowledge, any knowledge, though also for thinking critically.

- An awareness of both language and history as vital aspects of any knowledge.

- An interest in how things are learned, how they are taught and the whole meta-level of how we teach teaching, learn to teach, learn to promote learning.

- An adolescent rebellion that my parents (and my two younger sisters) lived unequivocally in the arts so I would live in maths and “hard sciences”!

However, as I went through eight or so years of medical training and developed, largely self-taught, a fascination with statistical methods, I was also, largely without realising it, looping back towards languages and histories. I am sure that brought me to psychiatry and psychotherapy. The rebellion and my loop back were caught beautifully at my interview for the group analytic training when the question I was asked almost before I sat down, by someone looking at my CV, was “Do you think hard science is a phallic metaphor?” I managed not to fall off the chair, took a couple of deep breaths and then almost burst out laughing as the wisdom of the question flooded over me. From there the interview was pretty much a pleasure as I remember it.

So my adolescent rebellion has continued, pretty gently, for another 30 years, but I try to weave the qualitative and the quantitative together.

The heritage of my mother’s love of languages meant that from my first use of questionnaires, I was interested in what happens as we move them across languages and cultures. Though I only speak English and poor French, and write middlingly competent R, I have co-led over 35 translations of the CORE-OM, the self-report questionnaire for adults, and rather fewer translations of the YP-CORE, the 10-item questionnaire for young people (roughly 11 to 20 year olds), and have been involved in a few other measure translations. I am still, much of most weeks, working on data from the translation work and writing papers out of that.

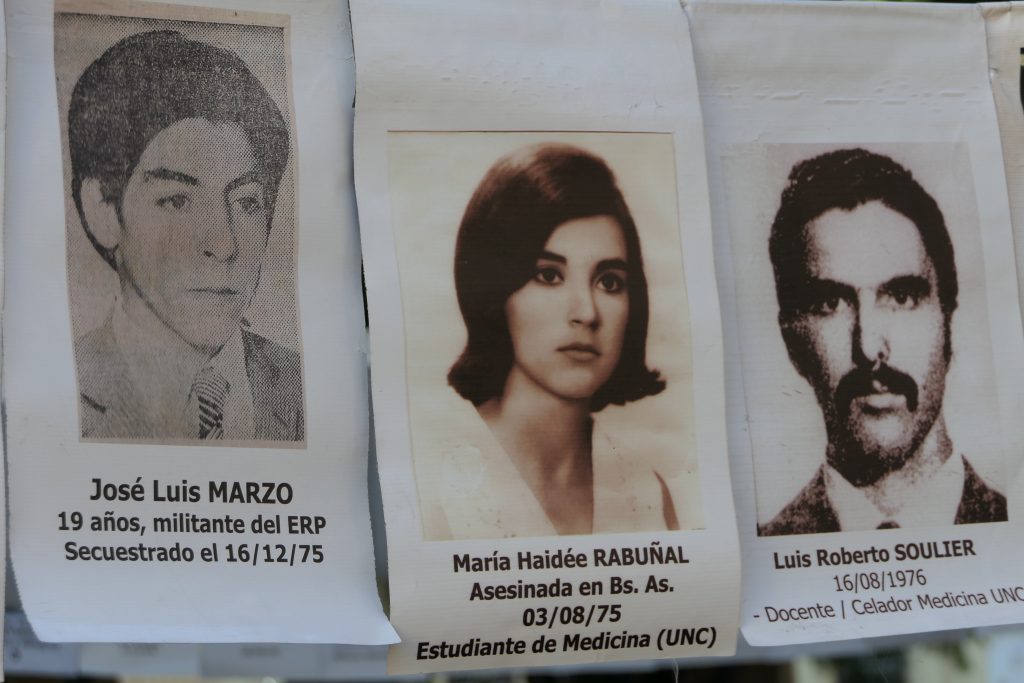

However, that work across languages and across cultures has developed the political side and my awareness of the methodolatry: of how so much in our field is oversold, and how we are encouraged to frame what we do and find as certain, as revealing clear and simple answers. I now see most of that as pretty illogical and work on the logic issues. Increasingly I also see it as shoring up economic and political systems that are deeply inequitable, dehumanising and destructive.

So what do I do?!

- I still crunch datasets, almost all of them arising from voluntary, very low cost, collaborative work with lovely people in many countries.

- As I don’t have to work for money (thank you pensions) I have the wonderful liberty that I pretty much only work with people I like: people who are not just out for themselves, people who see this work as a relationship not just a contract.

- Whenever I can, I try to ensure our publications don’t overclaim.

- I try to make sure the papers raise questions about the methods we used.

- Sometimes, sadly too rarely, I can write quite polemically about the problems of the methods and the politics behind modern healthcare and higher education, the problems of our societies and politics.

- Sometimes, I hope increasingly, I use simulations and thought experiments to criticise but also to develop our methods.

- Sometimes, but rarely, the work has a qualitative and/or a systemic, cultural, sociological, anthropological theme or themes.

- As well as doing work that ends in papers in the modern academic systems of journals I try, increasingly, to create other outputs:

  - Web pages of free resources and information that can be a bit more challenging to prevailing orthodoxies than papers, or which make information and methods available to anyone and for as near zero cost to them as possible:

    - A glossary complementing the OMbook (“Outcome Measures and Evaluation in Counselling and Psychotherapy”) that Jo-anne & I wrote about measuring change.

    - My pages (“Rblog”) using R to explain things in more detail than I do in the glossary.

  - Interactive online tools (shiny apps) that people can use.

  - A package of R functions that can help people who are not statisticians, not R gurus, use R (a free, open source software system largely for crunching data, including text data).

- I try, as in this post, and sometimes bridging across to my personal web pages, to locate this as my thinking but as open thinking, always owing 90-99% to others, sometimes others from centuries ago, sometimes others from very different cultures who spoke and wrote in other languages. That thinking is that in the “human sciences”, in exploring psychosocial interventions for, with, by and from people in distress, perhaps alienated from others, we only have woven understandings, no reductive simplicities or false certainties.

So that’s what I do! Not short but as clear as I could make it.

If you want to get updates, never more than monthly, on what I’m doing you have three options: sign up to one or more of these lists!

- Updates on my non-CORE work (things on this site, my Rblog, my Shiny apps and R package).

- Updates on CORE work I am doing (always with others, many others).

- Updates on my personal/family life (i.e. on my personal website: https://www.psyctc.org/pelerinage2016/).

Copyright and formalities

Created 12/9/24. Text by CE, licensed under the Attribution 4.0 International (CC BY 4.0) licence. Images are a mix of mine and ones I am sure I can use, but contact me if you want to use them so you can get the correct attributions.